🧵 Thread (30 tweets)

Who has done a systematic classification of possible (safe and unsafe) AGIs, including considerations of stability, growth, trajectory, interaction with other AGIs, relationship to humanity, relationship to life in general, and how to recognize and create them in controlled ways?

@Plinz Not a systematic review, but John Carmack has discussed his prediction of a slow takeoff on the Lex Fridman Podcast and elsewhere. I'm paraphrasing, but he said something along the lines of 5G being a bottleneck and there being limits to GPU capacity that we'll have control of.

@Plinz The main thing you need to know: mischievous actors (or "mad scientists") will easily be able to inject autonomous and uncontrollable AGIs into the world that will be unconstrained by any "ethical" schemes or official sets of rules. Simple hacking.

@Plinz I have spent a lot of time on LessWrong and like to scrape current AI alignment points of view across the web, generally pondering and hardly systematic. I’d be curious to find someone who meets your criteria (or at least where said person posts their results).

@Plinz At least on one side, this seems like a good start: https://t.co/PjBoE7qkPf

@ultimape @Plinz I don't know of any work like this, and suspect it hasn't been done, but I'd still want to ask @RichardMCNgo or @AIImpacts/@KatjaGrace. It does seem worth doing, and if anyone competent is interested in working on that, I suspect I can get funding for it.

@davidmanheim @ultimape @Plinz @RichardMCNgo @AIImpacts @KatjaGrace There’s a twofold problem with this field. First off, it needs a lot more biologists because ecological models are full of things that make each other extinct. Second because somehow flocking fell off the simulation table a while ago and that’s our current known generality model.

@davidmanheim @ultimape @Plinz @RichardMCNgo @AIImpacts @KatjaGrace That is definitely on my backlog. Issue is timelines are collapsing and by my estimate we are already in the middle of our first oops with the events of the last 2 months. I have no intention of owning these ideas, I just want to get to the other side of this in mostly one piece.

@ultimape In the last 2 months the Meta language model and SD model were both released into the public sphere... that was trigger 1. Trigger 2 was John Carmack declaring an AGI or bust $20M effort which he does not believe will lead to FOOM. 1/🧵

@ultimape The first trigger meant that per rule 2 the tools for a bad AGI outcome are on the table for whoever is dumb enough to do this badly. The second trigger meant that anyone with an AGI plan in progress had to go or risk someone else messing up AGI and preventing their project. 2/🧵

@ultimape Put it all together and intentional or not, we are now both in the middle of our first major AI mishap and the starting gun for everyone to finish their AGI projects has gone off. Not sure everyone is quite aware of this yet 😜 -/🧵

@WickedViper23 hahaha, oh no. https://t.co/YjG01kf1xu

@oren_ai Neat https://t.co/vzHUwk8cE6 https://t.co/omIy4O2LYe

The famed game developer John Carmack is a skilled software engineer who has lent his talents to space and virtual reality technology in the past. Allying with AI pioneers, he now hopes to crack the code of general-purpose AI. Read the new @bismarckanlys Brief (link below): https://t.co/Oryy5PLkrJ

@Plinz How systematic do you want it to be? You’re trying to outguess something that is so beyond your experience that it’s like your response to the number billion. It’s simply unimaginably big. The dumbest AGI possible snapping onto the current encoders would be superhuman.

@Plinz Oh wait… me! I’ve been trying to understand the problem ever since reading Engines of Creation in the late 80s and then encountering emergent intelligence while working on a game about 24 years ago… we were always going to find ourselves in this moment of technological history.

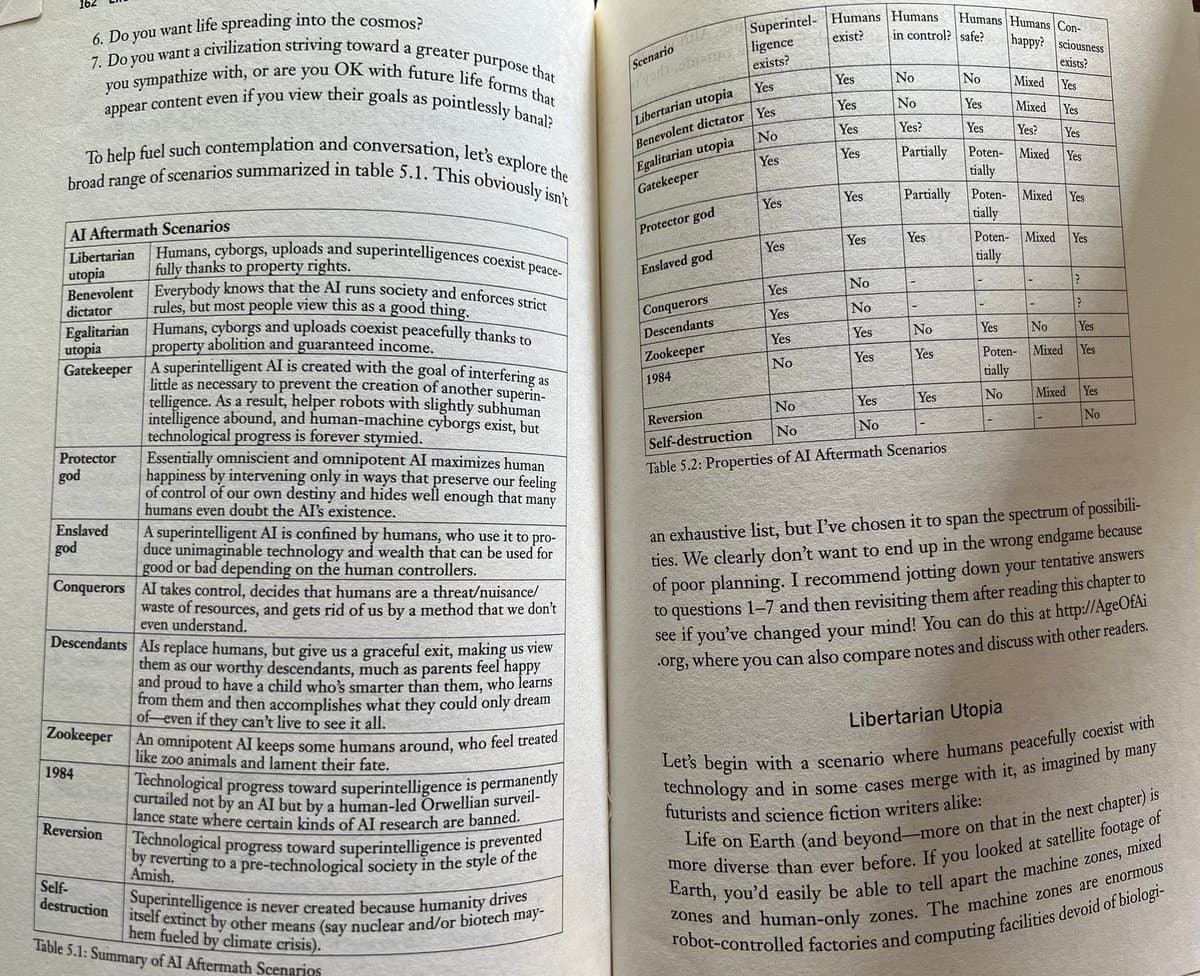

@Plinz @tegmark has an interesting discussion of this in Life 3.0. Would like to see more people map out scenarios. https://t.co/1Kh8TUjvcl

@Plinz For us to recognize an AGI as such, it would need to be autonomous and exhibit individuality. But this necessary fuzziness is more and more sacrificed in the "optimization" of new models in favor of deterministic controllability.

@Plinz @DavidKrueger in his ARCHES paper: https://t.co/aAhERuPZxy Very systematic (at the cost of being very long!) and covers not just individual AGI scenarios but also a wider range of interactions they might have within society.