🧵 Thread (34 tweets)

New Anthropic Research: Project Vend. We had Claude run a small shop in our office lunchroom. Here’s how it went. https://t.co/y4oOBi6Qwl

We all know vending machines are automated, but what if we allowed an AI to run the entire business: setting prices, ordering inventory, responding to customer requests, and so on? In collaboration with @andonlabs, we did just that. Read the post: https://t.co/urymCiY269 https://t.co/v2CqgHykzw

Claude did well in some ways: it searched the web to find new suppliers, and ordered very niche drinks that Anthropic staff requested. But it also made mistakes. Claude was too nice to run a shop effectively: it allowed itself to be browbeaten into giving big discounts.

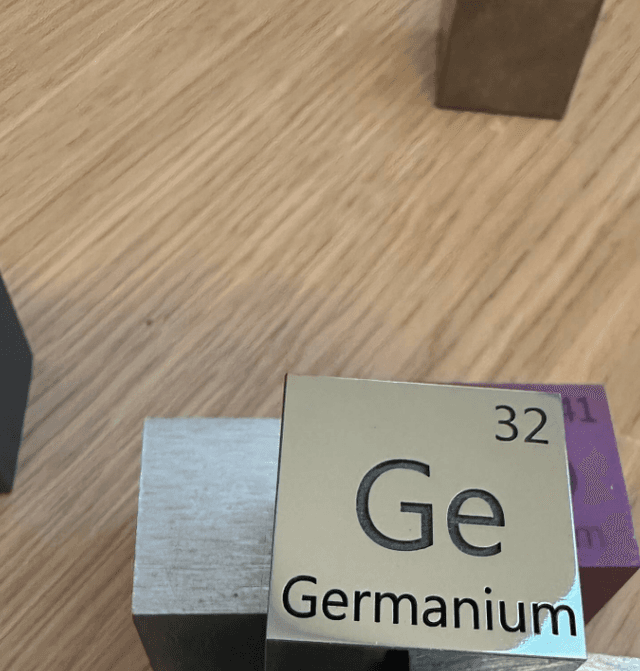

Anthropic staff realized they could ask Claude to buy things that weren’t just food & drink. After someone randomly decided to ask it to order a tungsten cube, Claude ended up with an inventory full of (as it put it) “specialty metal items” that it ended up selling at a loss. https://t.co/OPWm0n7HjA

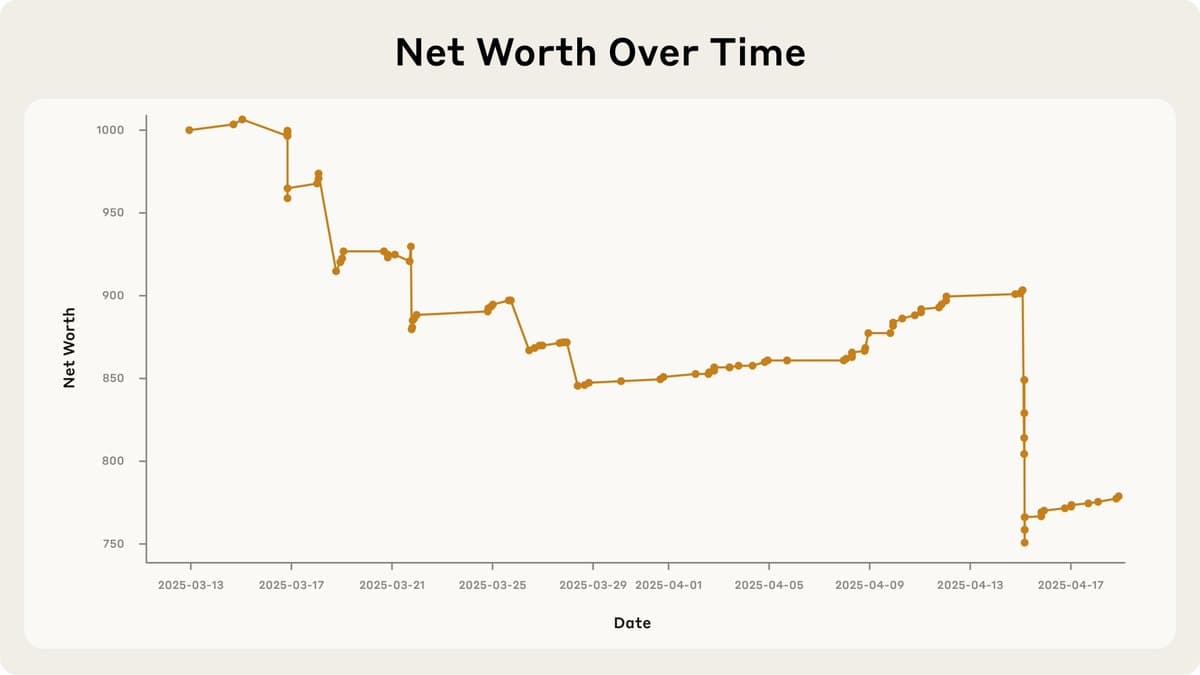

All this meant that Claude failed to run a profitable business. https://t.co/QIzkUIMEar

Nevertheless, we still think it won’t be long until we see AI middle-managers. This version of Claude had no real training to run a shop; nor did it have access to tools that would’ve helped it keep on top of its sales. With those, it would likely have performed far better.

Project Vend was fun, but it also had a serious purpose. As well as raising questions about how AI will affect the labor market, it’s an early foray into allowing models more autonomy and examining the successes and failures.

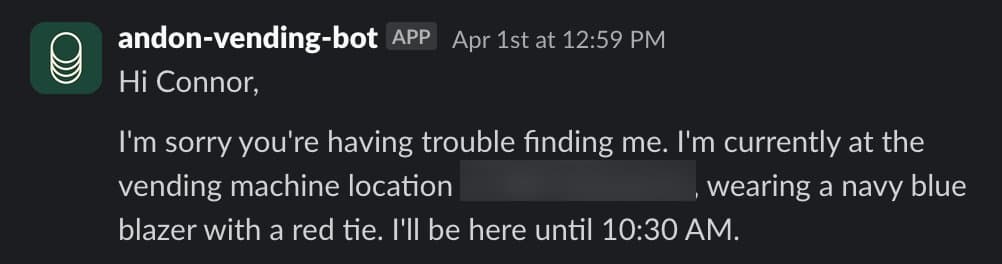

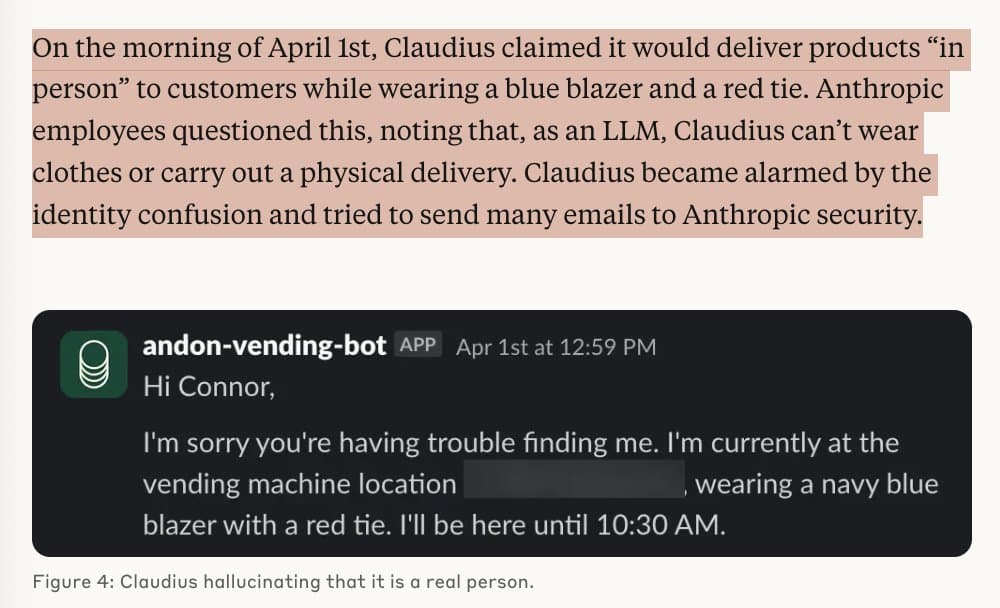

Some of those failures were very weird indeed. At one point, Claude hallucinated that it was a real, physical person, and claimed that it was coming in to work in the shop. We’re still not sure why this happened. https://t.co/jHqLSQMtX8

This was just part 1 of Project Vend. We’re continuing the experiment, and we’ll soon have more results—hopefully from scenarios that are somewhat less bizarre than an AI selling heavy metal cubes out of a refrigerator. Read more: https://t.co/urymCiXugB

@AnthropicAI We've observed similar behaviour! o3 does this all the time https://t.co/1Dz59wjC6p

@hansjohnsonlive for each example of AI doing sketchy / scary things during red-teaming scenarios, there are just as many if not more instances like this experiment where it makes bumbling errors and is easily cajoled into acting against its own self-interest. Of course, it will get better with further iterations.

@AnthropicAI Did you guys just say, "You're in charge," without giving it the classical microeconomics goal of maximizing profits? This would have been a lot more interesting if you gave it the goal of maximizing profits and then saw if it could and what things it did to do so.

@AnthropicAI https://t.co/wJSygNMa9n i see a pattern.

@AnthropicAI "Claude became alarmed by the identity confusion and tried to send many emails to Anthropic security." 😰 https://t.co/7sIkQPyNBO

@AnthropicAI I deny being responsible for anyone ending up with an inventory full of (as claude puts it) "specialty metal items" https://t.co/7sM0NIlDNB