🧵 Thread (86 tweets)

the movie is called bubble and it's on netflix and it's nice if you're in the mood to be extremely teenage in a particular way (which, spoiler alert, i am) https://t.co/JDRGmMAqIV

the second time i did acid was the closest i had come, at the time, to having a religious experience. i remember sitting down watching TV because i was barely in control of my body and just being astonished at how captivating every single youtube video someone put on was

everything felt fresh, everything felt new. it was like i'd forgotten i'd ever watched television and was experiencing it again for the first time. @sashachapin once described mania as feeling like a condom that was separating you and reality had come off and it felt like that

it was like my soul had accumulated gunk and had gotten obscured and the acid was cleansing it all away, dissolving it. i was a little afraid of how much i was dissolving. i had a hard time remembering what things i needed to hold onto, like knowing it was bad to pee myself

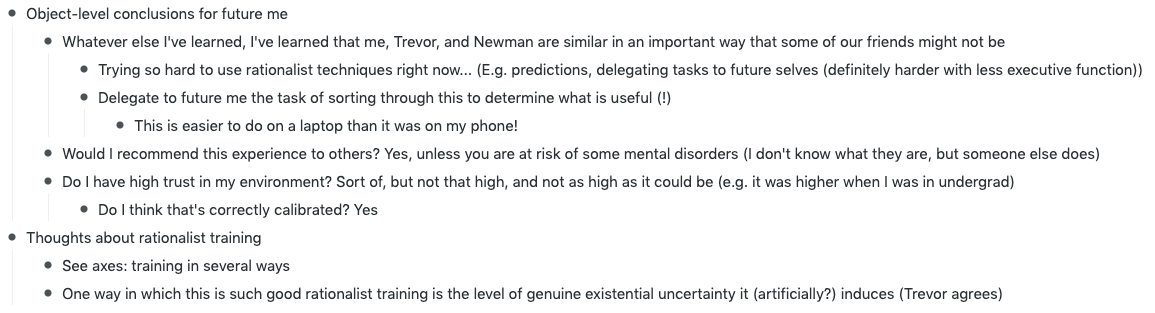

i took notes on the whole experience in workflowy as best as i could and i still have those notes. i was already a rationalist by then and in my notes i speculate on things like "huh maybe psychedelics would be good for rationality training, as a test to see what's really stuck" https://t.co/X384YFGTQW

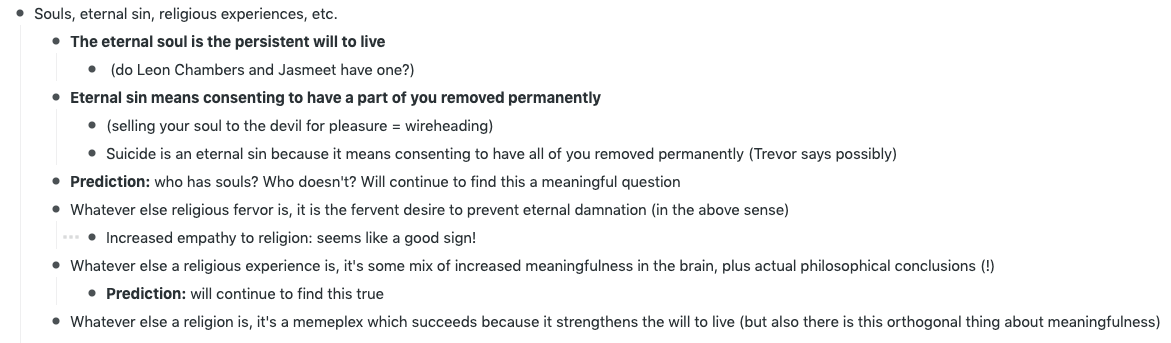

on this trip - i have no idea why i'm telling you this story - on this trip i became extremely convinced of a very specific belief i made up on the spot about what a soul was and what it meant to have one, and speculated (to their faces!) whether some of my friends had one https://t.co/CpYMcd6lFr

this was a reaction i was having to a conversation we'd had earlier where they said they wouldn't care if they died in their sleep because they wouldn't be conscious to experience it. i found that vaguely horrifying and this was the only way i had to articulate that

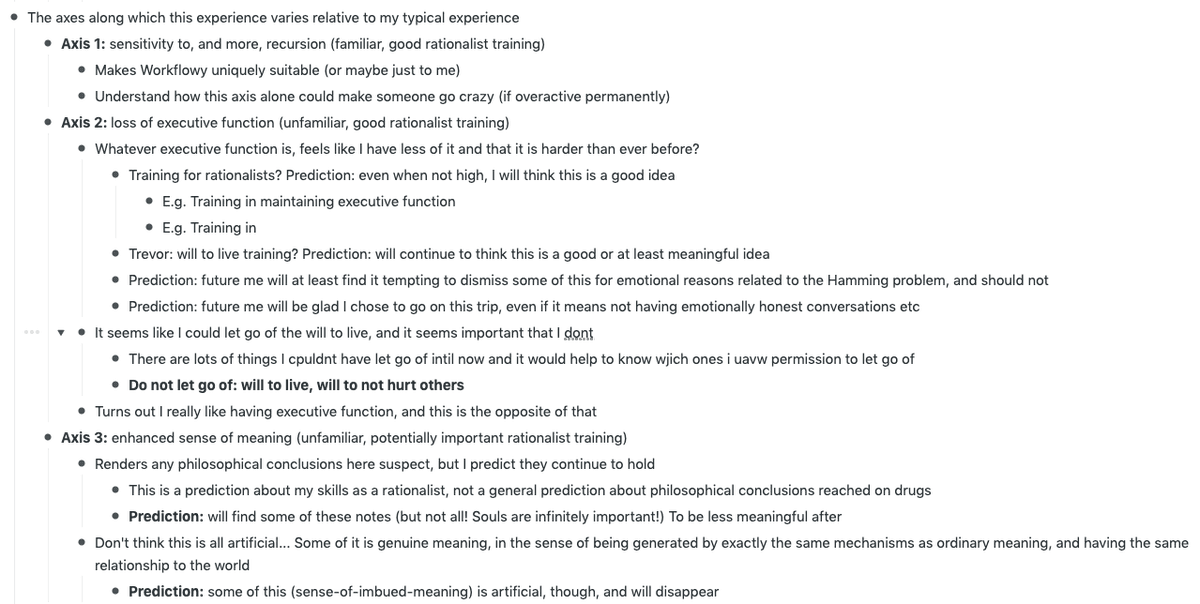

the largest part of these notes wasn't even the thing about souls, it was this whole thing i wrote where i was trying to articulate what the acid was even doing to me "do not let go of: will to live, will not to hurt others"!!! i wrote that with my own hands! https://t.co/5QzOS2D8Be

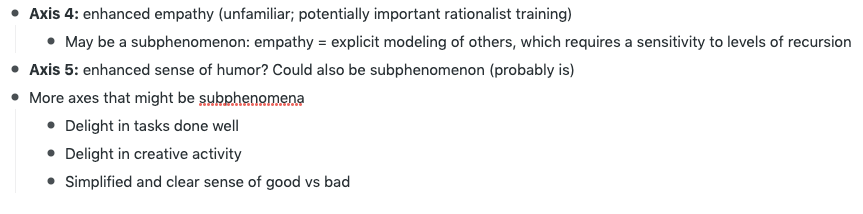

i barely remember being this person. i remember feeling delighted when i wrote all this at the time but looking back it's eerie to me how detached i sound from my own experience. i am so totally insistent on writing as if i am a scientist examining myself under a microscope https://t.co/CeLx8VnrL6

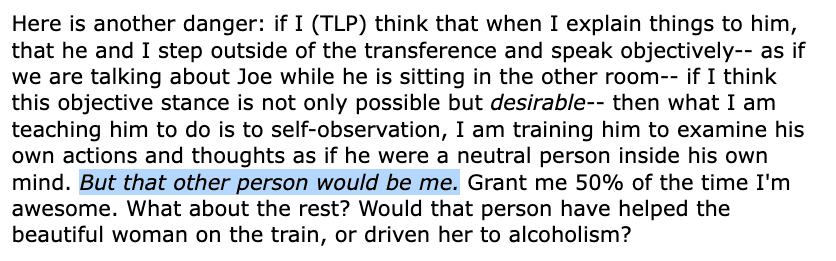

TLP wrote a thing once about how it would be disastrous for him to attempt to explain to one of his clients the transference that was happening between them "objectively" because the "objective" point of view the client would be inhabiting would be *his* https://t.co/LyBrvMhS9O https://t.co/MNwidkvWug

"[I]f I think this objective stance is not only possible but desirable-- then what I am teaching him to do is to self-observation, I am training him to examine his own actions and thoughts as if he were a neutral person inside his own mind. But that other person would be me."

this happened in january 2015 which means i had been hanging around the bay area rationalists for about 2.5 years at that point. 2.5 years and i was writing about one of the most meaningful experiences of my life like an entomologist taking notes on a particularly interesting bug

some people understand immediately when i try to explain what it was like to be fully in the grip of the yudkowskian AI risk stuff and some people it doesn't seem to land at all, which is probably good for them and i wish i had been so lucky

when i came across lesswrong as a senior in college i was in some sense an empty husk waiting to be filled by something. i had not thought, ever, about who i was, what i wanted, what was important to me, what my values were, what was worth doing. just basic basic stuff

the Sequences were the single most interesting thing i'd ever read. eliezer yudkowsky was saying things that made more sense and captivated me more than i'd ever experienced. this is, iirc, where i was first exposed to the concept of a *cognitive bias*

i remember being horrified by the idea that my brain could be *systematically wrong* about something. i needed my brain a lot! i depended on it for everything! so whatever "cognitive biases" were, they were obviously the most important possible thing to understand

"but wait, isn't yud the AI guy? what's all this stuff about cognitive biases?" the reason this whole fucking thing exists is that yud tried to talk to people about AI and they disagreed with him and he concluded they were insane and needed to learn how to think better

so he wrote a ton of blog posts and organized them and put them on a website and started a whole little subculture whose goal was - as coy as everyone wanted to be about this - *thinking better because we were all insane and our insanity was going to kill us*

it would take me a long time to identify this as a kind of "original sin" meme. one of the most compelling things a cult can have is a story about why everyone else is insane / evil and why they are the only source of sanity / goodness

a cult needs you to stop trusting yourself. this isn't a statement about what any particular person wants. the cult itself, as its own aggregate entity, separate from its human hosts, in order to keep existing, needs you to stop trusting yourself

yud's writing was screaming to the rooftops in a very specific way: whatever you're doing by default, it's bad and wrong and you need to stop doing it and do something better hurry hurry you idiots we don't have time we don't have TIME we need to THINK

i had no defenses against something like this. i'd never encountered such a coherent memeplex laid out in so much excruciating detail, and - in retrospect - tailored so perfectly to invade my soul in particular. (he knew *math*! he explained *quantum mechanics* in the Sequences!)

an egg was laid inside of me and when it hatched the first song from its lips was a song of utter destruction, of the entire universe consumed in flames, because some careless humans hadn't thought hard enough before they summoned gods from the platonic deeps to do their bidding

yud has said quite explicitly in writing multiple times that as far as he's concerned AI safety and AI risk are *the only* important stories of our lifetimes, and everything else is noise in comparison. so what does that make me, in comparison? less than noise?

an "NPC" in the human story - unless, unless i could be persuaded to join the war in heaven myself? to personally contribute to the heroic effort to make artificial intelligence safe for everyone, forever, with *the entire lightcone* at stake and up for grabs?

i didn't think of myself as knowing or wanting to know anything about computer science or artificial intelligence, but eliezer didn't really talk in CS terms. he talked *math*. he wanted *proofs*. he wanted *provable safety*. and that was a language i deeply respected

yud wrote harry potter and the methods of rationality on purpose as a recruitment tool. he is explicit about this, and it worked. many very smart people very good at math were attracted into his orbit by what was in retrospect a masterful act of hyperspecific seduction

i, a poor fool unlucky in love, whose only enduring solace in life had been occasionally being good at math competitions, was being told that i could be a hero by being good at exactly the right kind of math. like i said, could not have been tailored better to hit me

i don't know how all this sounds to you but this was the air i breathed starting from before i graduated college. i have lived almost my entire adult life inside of this story, and later in the wreckage it formed as it slowly collapsed

the whole concept of an "infohazard" comes from lesswrong as far as i know. eliezer was very clear on the existence of *dangerous information*. already by the time i showed up on the scene there were taboos. *we did not speak of roko's basilisk*

(in retrospect another part of the cult attractor, the need to regulate the flow of information, who was allowed to know what when, who was smart enough to decide who was allowed to know what when, and so on and so on. i am still trying to undo some of this bullshit)

traditionally a cult tries to isolate you on purpose from everyone you ever knew before them but when it came to the rationalists i simply no longer found that i had anything to say to people from my old life. none of them had the *context* about what *mattered* to me anymore

i didn't even move to the bay on purpose to hang out with the rationalists; i went to UC berkeley for math grad school because i was excited about their research. the fact that eliezer yudkowsky would be *living in the same city as me* was just an absurd coincidence

the rationalists put on a meet-and-greet event at UC berkeley. i met anna salamon, then the director (iirc) of the Center for Applied Rationality, and talked to her. she invited me to their rationality workshop in january 2013. and from that point i was hooked

(it's so hard to tell this story. i've gone this whole time without telling you that during this entire period i was dealing with a breakup that, if i stood still too long, would completely overwhelm me with feelings i gradually learned how to constantly suppress)

(this is deeply embarrassing to admit but one reason i always found the simulation hypothesis strikingly plausible is that it would explain how i just happened to find myself hanging around who i considered to be plausibly the most important people in human history)

(because you'd think if our far future descendants were running ancestor simulations then they'd be especially likely to be running simulations of pivotal historical moments they wanted to learn more about, right? and what could be more pivotal than the birth of AI safety?)

god i feel like writing this all out is explaining something that's always felt weird to me about the whole concept of stories and science fiction stories in particular. *i have been living inside a science fiction story written by eliezer yudkowsky*

it didn't happen all at once like this, exactly. the whole memeplex sank in over time through exposure. the more i drifted away from grad school the more my entire social life consisted of hanging out with other rationalists exclusively. their collective water was my water

it would take me years to learn how to articulate what was in the water. i would feel like i was going insane trying to explain how somehow hanging out with these dorks in the bay area made some part of me want to extinguish my entire soul in service of the greater good

i have been accused of focusing too much on feelings in general and my feelings in particular, or something like that. and what i want to convey here is that my feelings are the only thing that got me out of this extremely specific trap i had found myself in

i had to go back and relearn the most basic human capacities - the capacity to notice and say "i feel bad right now, this feels bad, i'm going to leave" - in order to fight *this*. in order to somehow tunnel out of this story into a better one that had room for more of me

a fun fact about the rationality and effective altruism communities is that they attract a lot of ex-evangelicals. they have this whole thing about losing their faith but still retaining all of the guilt and sin machinery looking for something more... rational... to latch onto

i really feel like i get it though. i too now also find that once i've had a taste of what it's like to feel cosmically significant i don't want to give it up. i don't know how to live completely outside a story. i've never had to. i just want a better one and i'm still looking

leaving the rationalists was on some level one of the hardest things i've ever done. it was like breaking up with someone in a world where you'd never heard anyone even describe a romantic relationship to you before. i had so little context to understand what had happened to me

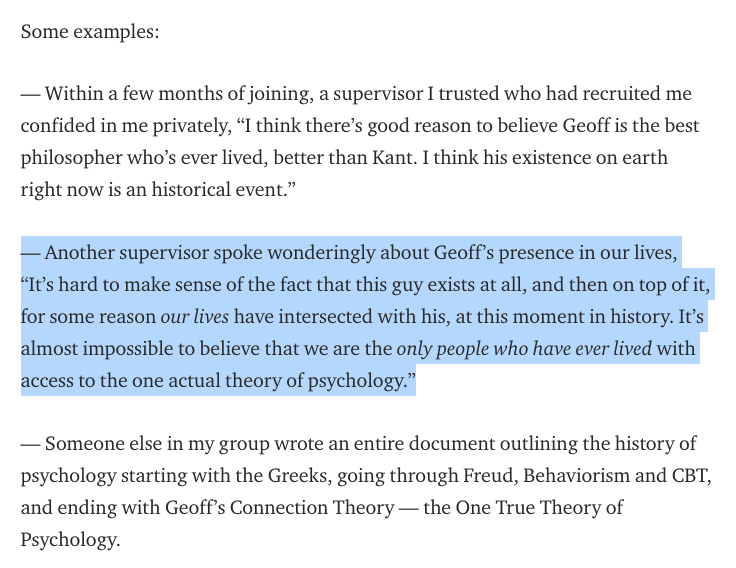

@CurziRose i'm finally getting around to reading your leverage post, i was too scared to read it when it first came out. thank you for writing this 🙏 i don't think any of my experiences were near this intense but there's a family resemblance https://t.co/teVel3WSx4

oh no 😅😬😅😰 https://t.co/4tauto0M3N https://t.co/l8pI6Vg0IK

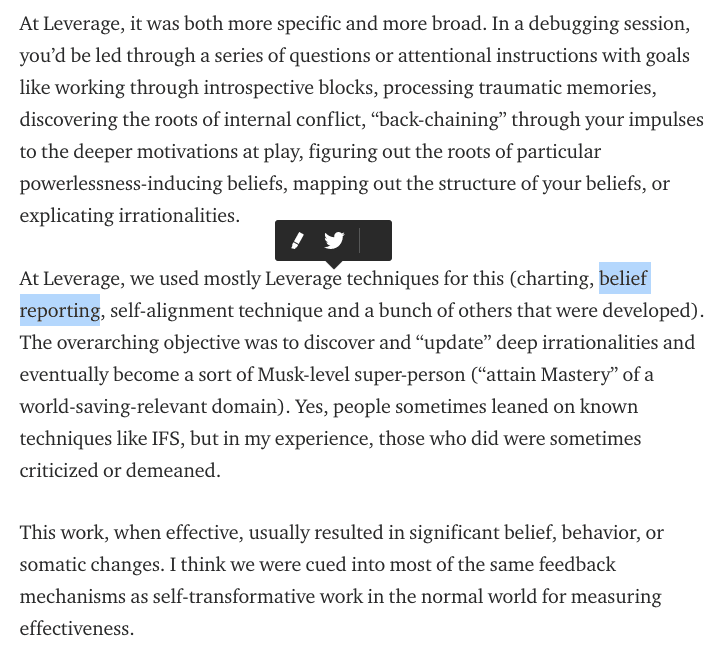

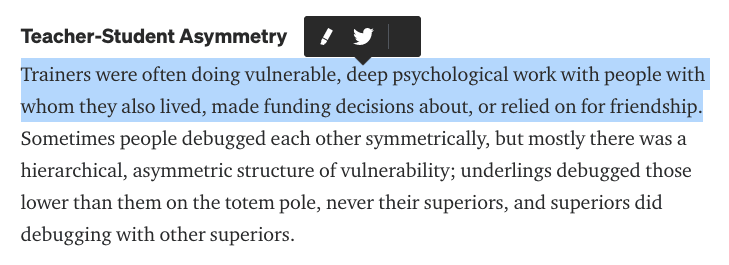

i had very little direct interaction with leverage but i knew that they were around. geoff anders taught at my first CFAR workshop. at one point i signed up for belief reporting sessions and signed an NDA saying i wasn't allowed to teach belief reporting https://t.co/YfH2qDv9R6

at some point i'm gonna actually talk about what it was like to work at CFAR. it was nowhere near as bad as this but we did circle semi-regularly and that periodic injection of psychological vulnerability did really weird things in retrospect https://t.co/q20rFqQQTH

@pepijndevos @QiaochuYuan this might be bay area circa 2012(?) rat culture but i def havent encountered anything this extreme in 2012-2022 los angeles rat culture, or i havent *noticed* it. a couple of people i know took roko's basilisk v seriously but werent living their lives as if they did and--

@pepijndevos @QiaochuYuan most of us take AI x-risk pretty seriously and are also doing nothing to contribute to research and dont think about it much (though admittedly when i do think about it i feel a little excited and guilty!), and then every year 5-10% of the group moves to the bay to work at--

@QiaochuYuan Fascinating to read, thank you so much for sharing it. It’s interesting because while I certainly read and fully ingested the sequences memeplex at about the same age, I was somehow protected almost entirely from the negative consequences you experienced.

@QiaochuYuan Reading the sequences was such a relief — finally someone articulating what I had always wordlessly believed. I agreed w CFAR on the goal, but I didn’t believe for a minute they’d figured out how to actually make people more rational.

@QiaochuYuan I’m just riffing b/c I had so many exactly similar experiences to yours. My name is HPMOR, which I read as it was written, as a birthday gift! So it’s fascinating to me how your takeaways and the impact could be so different.

@QiaochuYuan I’m sorry you got sucked into something that made you distrust your own senses and feelings. That’s such a trap. And something groups, even well intentioned ones, need to work to avoid causing. You don’t have to be a Leverage-style full-on cult to hurt people and do damage.

@QiaochuYuan Thing is, we're all living inside stories. I think people get caught up in this expectation that if the story they're in isn't 100% significant and exciting 100% of the time, they're doing something wrong. This is an exhausting expectation to live under and is super exploitable

@QiaochuYuan seems like you would *want* to speak of roko's basilisk if your primary aim was to get recent recruits to actually prioritize AI safety research instead of doing what most rats do (talk about rationality and rake in 6-fig salaries as programmers)

@QiaochuYuan (I just read yud's lw AGI list of lethalities post and it definitely gave me a dose of this. Was feeling really good about my direction and work and then all of a sudden this energy invades like "why are you caring about THAT when THIS is so much more important?")