🧵 Thread (5 tweets)

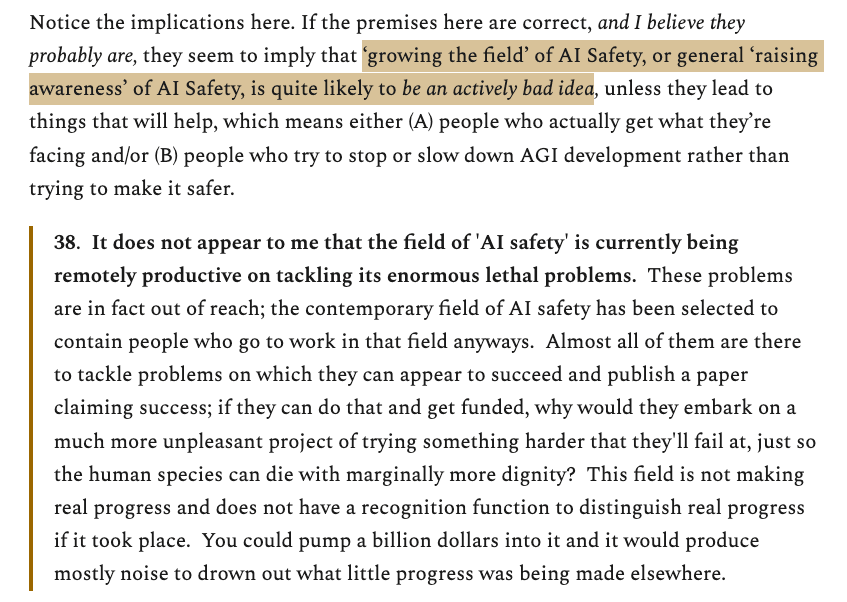

“AI safety research” has probably increased AI risk, and doing more of it will probably make things still worse. From @TheZvi’s response (first paragraph) to @ESYudkowsky’s recent doom post (quoted paragraph 38), both of which I found surprisingly interesting. https://t.co/ZP8e5m5nSm

@Meaningness @TheZvi @ESYudkowsky I seriously doubt that risk has increased. The amount of money & talent being poured into advancing AI capabilities is still 3-4 orders of magnitude more than into AI safety even by the broadest definition; any capabilities blowback from safety work is a rounding error.

@Meaningness @TheZvi @ESYudkowsky The point about current safety work "letting people fool themselves about the lethality of the problem" is more plausible. But note that 99% of AI researchers already behave as if there is no problem! Convincing them that there is a problem at all should be the priority.

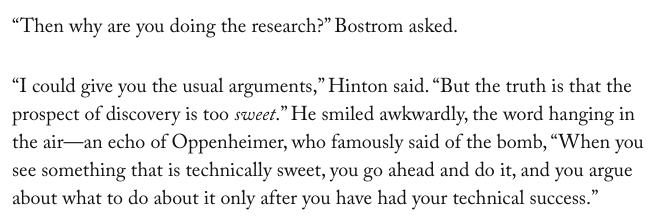

@Meaningness @TheZvi @ESYudkowsky An implicit assumption here is that people working on AI safety are somehow smarter or more able to advance capabilities; this is bunk. Vast majority of the best ML researchers & engineers just don't think about safety - they're motivated by other things. Relevant Geoff Hinton: https://t.co/wrGmlbL3Ut