🧵 Thread (43 tweets)

Excellent post about metaphor design! This phrase really struck me... "𝘗𝘳𝘰𝘨𝘳𝘢𝘮𝘮𝘪𝘯𝘨 𝘪𝘴 𝘫𝘶𝘴𝘵 𝘢 𝘨𝘪𝘢𝘯𝘵 𝘴𝘵𝘢𝘤𝘬 𝘰𝘧 𝘮𝘦𝘵𝘢𝘱𝘩𝘰𝘳𝘴." I love metaphors, I'm great at metaphors... and I've had a very uneasy relationship with programming. What's up w/that? https://t.co/T673EdNhJQ

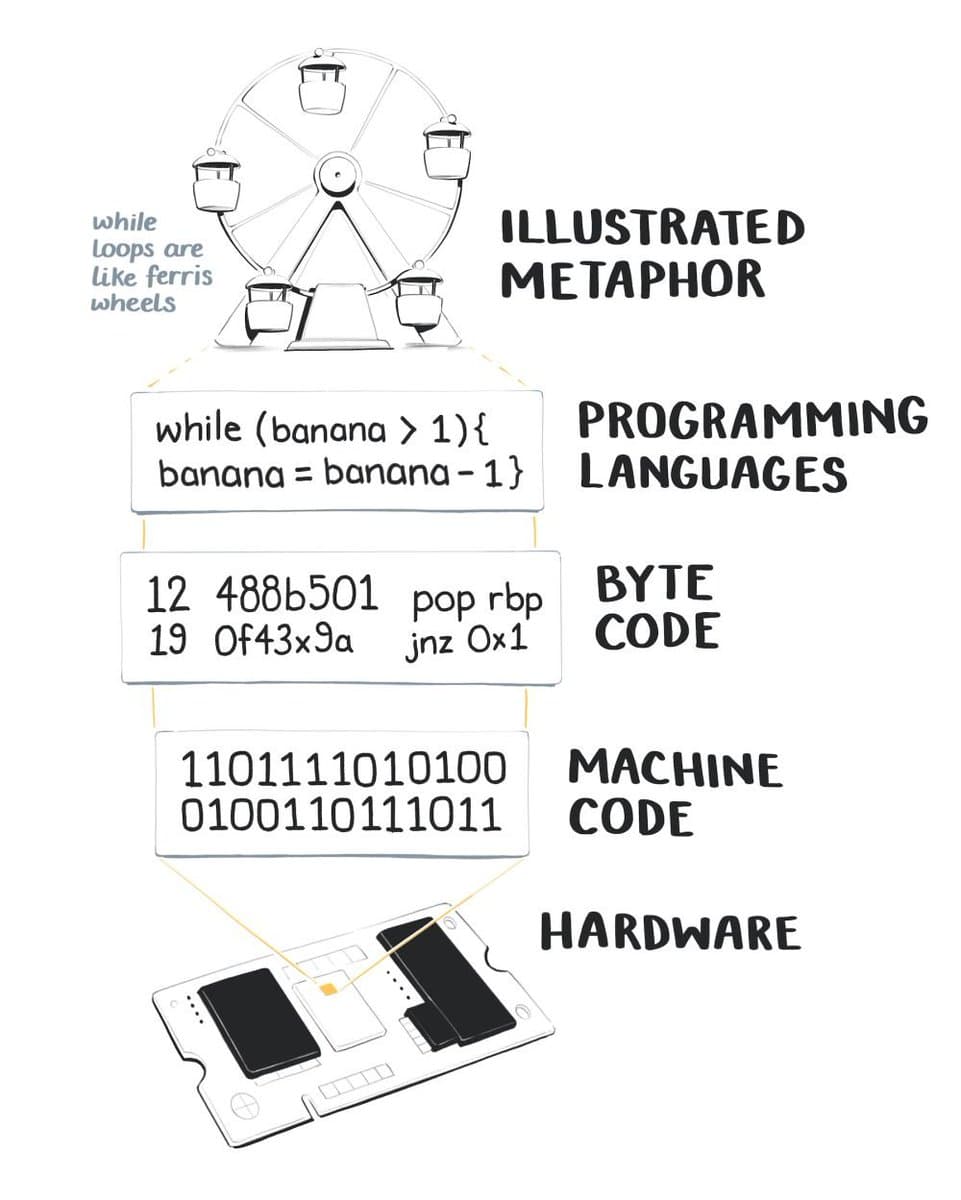

Here's that quote again, in a bit more context & illustrated: "𝘗𝘳𝘰𝘨𝘳𝘢𝘮𝘮𝘪𝘯𝘨 𝘪𝘴 𝘫𝘶𝘴𝘵 𝘢 𝘨𝘪𝘢𝘯𝘵 𝘴𝘵𝘢𝘤𝘬 𝘰𝘧 𝘮𝘦𝘵𝘢𝘱𝘩𝘰𝘳𝘴. Each layer of the metaphorical stack moves us further away from machine world, and closer to human world." https://t.co/pWAIO3nbAo

When I've seen friends dive into pages of Greek-laden math equations, or piles of code that look to me like memorizing arbitrary rulesets, I've felt a sense of panic/despair -- like I'm just not innately suited for that.

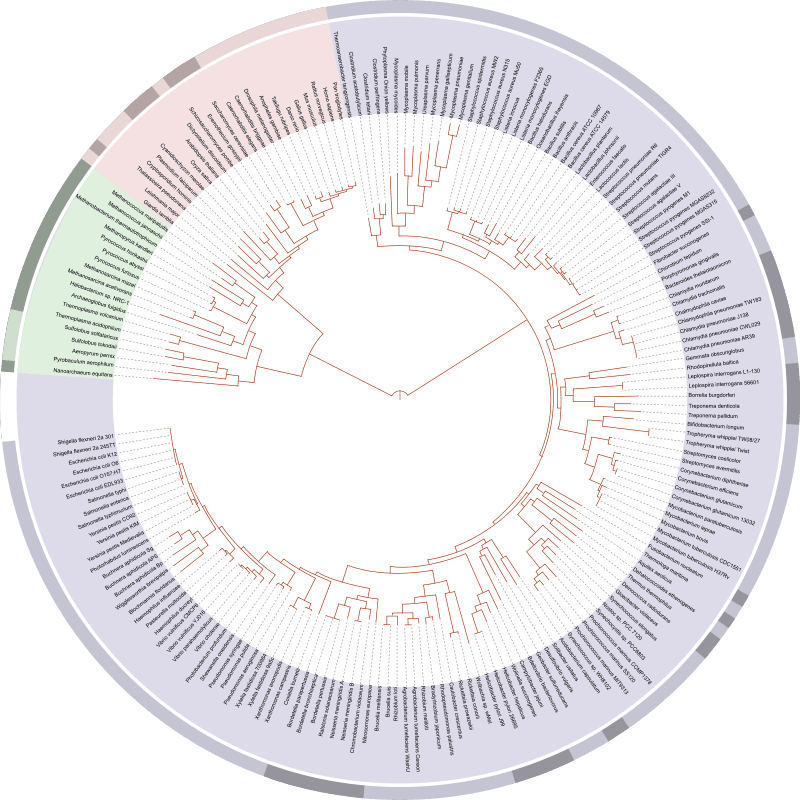

But I have learned large "flat" sets of information (like Spanish vocab), and I'm very good at pattern recognition that fits onto a tree of knowledge (no idea how many plants / fungi / animals I can identify... prob well over 1000?) (img: https://t.co/7dvTYwRN31) https://t.co/EM9E0323V2

I'd probably have a better experience if I was starting with direct visual feedback (like Processing), or was able to interact with the code in a more spatial / diagrammatic way (like in Blender, probably?) https://t.co/SULhi0pIyv

Or, of course, interacting with code in physical space, like what @worrydream & folks are doing at @Dynamicland1! https://t.co/HCY478RDAE

Probably my worst experience with coding was trying to go too fast through Hartl's Ruby on Rails tutorial, attempting to keep up with a class. I was miserable. I had no idea what was going on, or why something wasn't working. Everything was totally opaque, and I was lost.

Oh, I'm going to jump back to a concept from a few tweets ago -- this piece by Bret Victor from 8 years ago says that the Processing language isn't in itself an answer to the thing I'm wanting: https://t.co/ZJdsgEwlAI

Could call them "prickles" and "goo", using Alan Watts' terms (which are inseparable; the world is gooey prickles and prickly goo, "and we're always playing with the two"): https://t.co/rp5e20Oj5D

An example of bridging from the "prickly" side: colorized math equations from @betterexplained https://t.co/eqiSPjaF1J

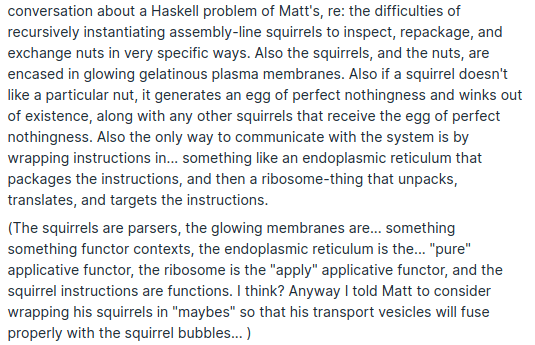

Here's an example of an attempted bridge from the "gooey" side... Was trying to translate @mattoflambda's "zipWith ($) (cycle [id, (*2)])" into something about glowing membrane-encased squirrels sorting nuts on a conveyor belt https://t.co/YNoF2J3qZ9

Now, I don't count that as fully successful, because I was jumping right into the deep end of a Haskell issue, and I didn't have the background knowledge to really understand where the precision points needed to be and where the metaphor / story could remain fluid

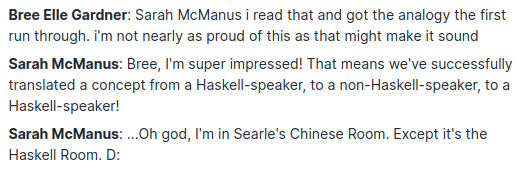

BUT -- it was accurate enough that *another* person who spoke Haskell was able to get the analogy by reading it! https://t.co/TVNcSUcfnY
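For readers who don't speak Haskell, here is a minimal sketch of what the quoted expression does when applied to a list. The name `alternateDouble` and the sample input are my own, chosen for illustration; only the expression `zipWith ($) (cycle [id, (*2)])` comes from the thread. `cycle` repeats the two functions forever (leave it alone, double it, leave it alone, double it, ...), and `zipWith ($)` pairs each function with the next list element and applies it.

```haskell
-- Hypothetical wrapper name; the right-hand side is the expression from the thread.
-- cycle [id, (*2)] is the endless list: id, (*2), id, (*2), ...
-- zipWith ($) applies the n-th function to the n-th element of the input.
alternateDouble :: [Integer] -> [Integer]
alternateDouble = zipWith ($) (cycle [id, (*2)])

main :: IO ()
main = print (alternateDouble [1, 2, 3, 4, 5])
-- prints [1,4,3,8,5]
```

In conveyor-belt terms: every other item passes through untouched, and the ones in between come out doubled. The output stops when the input runs out, even though the list of functions never does.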

@SarahAMcManus Fascinating. You make a distinction between “flat” and (god forgive me) “deep” learning. I'd readily identify with the latter. I get nervous when any particular layer gets too broad. Depth is my jam. I haven't seen many other people identify as flat-dominant learners. Have you?

@AskYatharth I think there's one variety of it that's like... quizbowl / Jeopardy / trivia style "flat" learning? Or any feats of memorization... for example, someone who is super jazzed to know all the countries of the world and their capitals + other geography facts

@SarahAMcManus Whereas deep-dominant learners like me lose our footing in flat-learning because it gets too broad, we get nervous we’ll forget, we can’t attach as many spatial/navigational cues. Deep-learning sits/congeals better in our brains.

@SarahAMcManus The core skills then are: solidifying and achieving a depth of intuition with a layer so solid the next layers can be built on top (deep); marking and navigating wide expanses of learning territory with effective pseudo-spatial cues (flat)

@AskYatharth I like what you've brought in about layers feeling shaky or solid! Reminds me of what @drossbucket has written about cognitive coupling & decoupling: https://t.co/YjbzzVFLFD

@AskYatharth @drossbucket In that framework, I imagine that cognitive decouplers would probably be more able to ignore layers of context that they didn't understand, and focus on manipulating the symbols in the specific question at hand, to get the right answer or to get to the next step.

@SarahAMcManus @drossbucket a bigger part is building on the lower-level layers so stably, in a way that just fits (it's obvious *how you might come to construct the system yourself that way*), that the higher layers just feel like dealing with one layer, not trying to deal with many

@SarahAMcManus @drossbucket also noticing that I am tired and defaulting to masc-coded speech patterns of asserting my opinions and expecting flat contradiction if you disagree, and that creates emotional labour if both ppl are not using the same conversational mode, and I want to call that in ✨ ok 💤😊

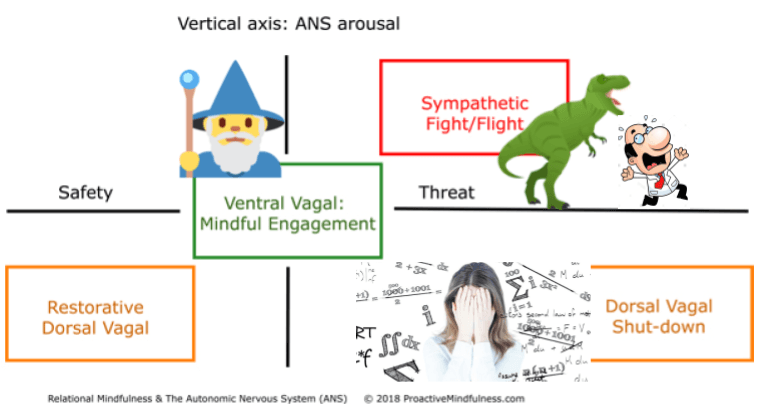

@AskYatharth @drossbucket Hm, maybe 3 modes to explore? 😖 Anxiety / Paralysis: "My awareness exceeds my grasp"; 🦖 Unintended Consequences: "My grasp exceeds my awareness"; 🧙‍♂️ Wizard: "I can grok all needed context & manipulate symbols fluidly"

@AskYatharth @drossbucket @Malcolm_Ocean ...? https://t.co/LJQzSnoYDp

@SarahAMcManus I think the metaphors (abstractions, really) we use in programming are of a quite specific nature that natural language metaphors tend to lack - the nice thing about natural language metaphors is their imprecision. The nice thing about programming metaphors is their precision.

@SarahAMcManus Or to look at it another way: The metaphors we use in natural language are mostly there to help us think about the problem in a different way, and so it doesn't matter if they represent things accurately, but a programming metaphor needs a precise translation into specific action

@DRMacIver Mhm! Or... there's something about the metaphors that I like best, where there's a kind of "click" feeling that has a geometric goodness-of-fit. It's not vague overall - some parts need to be very precise, while other parts are left fluid until their constraints become relevant

@DRMacIver Maybe it's like Richard Feynman and the "Green Hairy Ball Thing"? https://t.co/m5sBjY1wZQ

@SarahAMcManus Yeah I think it's definitely important that metaphors have this sort of goodness of fit thing, but you get to be sloppy about a lot of the bits that don't matter, or the things that don't matter here and now, because there's human judgement in the loop.

@DRMacIver And then because doing stuff with code isn't tethered to physically possible environments, I figure it makes some moves easy ("Oh, it's 10-dimensional")... but also you can accidentally open up infinite loops, or send things into the irretrievable void, or other weird moves