
🧵 Thread (22 tweets)

Reading this post by @JanelleCShane and I'm like "oh man, our current ai bots are basically left hemispheres". > "When confused, it tends not to admit it" https://t.co/zdgi1HW5KC https://t.co/wKowi16Rty

To explain what I mean, first I need to be clear that I'm talking about new models of the brain hemispheres, not the old and well-debunked models from the 60s! And I'll share a few of the new findings that are relevant. https://t.co/wbxfAgtsvI

Early research on split-brain patients, by Gazzaniga, noticed that the left hemisphere would invent ("confabulate") explanations for things the right hemisphere had done, without any apparent regard to the possibility that it might be missing information (that the RH had).

Building on the knowledge that the LHem handles speech, Gazzaniga concluded that the LH was the "interpreter". Later he realized his answer to "what does each hemisphere do?" was an oversimplification, and abandoned the prospect of an LH/RH model in favor of ~"100s or 1000s of modules".

This was an improvement, on one level, since his model *was* an oversimplification, but it leaves a question unanswered: why then *are* these modules divided into two very distinct groups, not just in us but in most vertebrates? https://t.co/rnG0jPyZWW

Pitch for why McGilchrist's @divided_brain model matters: Lateralization is not just in humans but in mice & birds & fish, so there's gotta be SOMETHING going on there. If you try to understand the rest of the brain while ignoring hemispheres, you're going to be confused!

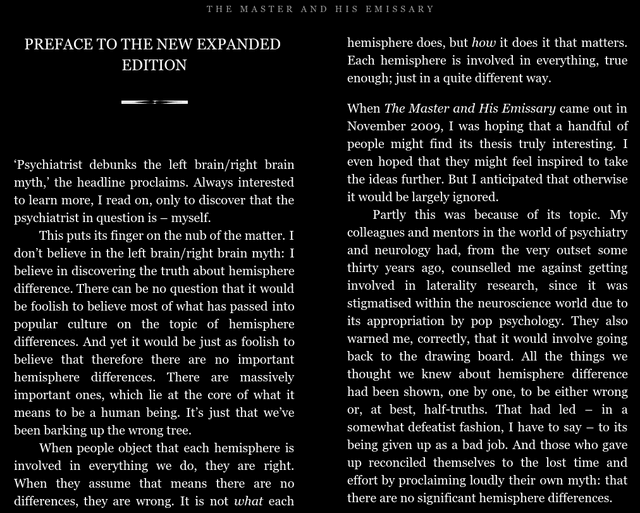

And unfortunately, people got so embarrassed about how oversimplified the early hemisphere models were that for decades it's been unfashionable to research hemisphere models, and so most people basically pretend that it's not important. However... https://t.co/UCX5mC7qEx

The obvious importance of the hemisphere axis to our experience of thinking & acting means that people are going to try to talk about it—so if they refuse to use hemisphere terms, they'll use others. This then confuses the meanings of those other terms! https://t.co/4jhsw8EPJA

Fortunately, a psychiatrist named Iain McGilchrist has spent 2+ decades working on a new @divided_brain framework, which is less about "what does each hemisphere do?" (functionally) and more about the "how": the personality, attitude & paradigm of each. https://t.co/qiDmn1gf9e

McGilchrist talks about how the LHem perceives everything in terms of the known categories it understands, and so is remarkably unaware of its own blindspots, both conceptual and visual (e.g. patients with right-hemisphere damage not shaving the left side of their face) https://t.co/nRoG2yqHay

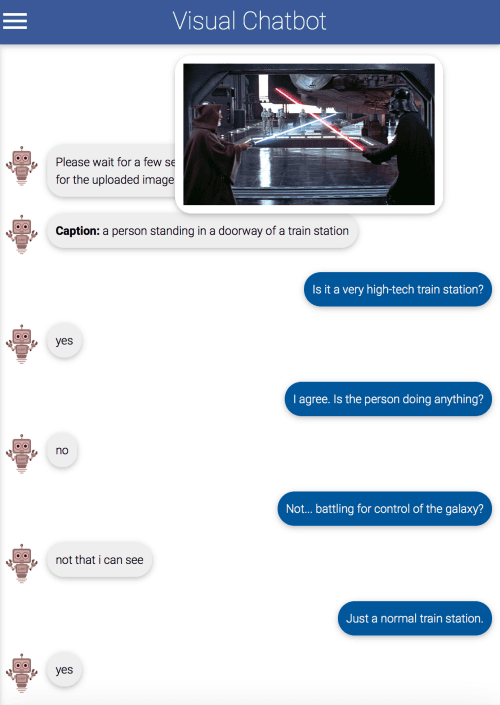

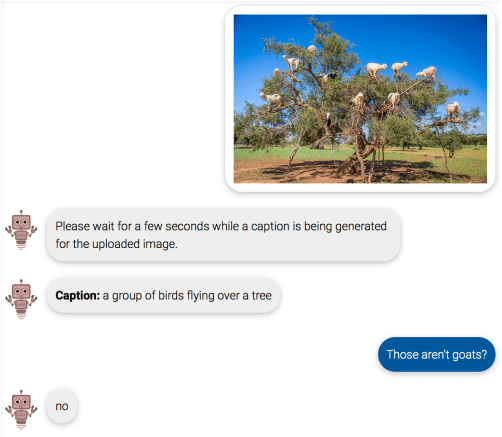

Coming back to @JanelleCShane's post, there's a clear parallel between current ML developments and how the LH functions. This was boringly obvious when all classifiers could do was categorize things, but now they're attempting to describe scenes & the blindspots are glaring.

To be clear, these classifiers don't appear to even be *attempting* to update their model of the scene based on someone's skepticism of their original description, and I imagine there could be straightforward hacks to train them to do this by tweaking priors etc based on Qs.

...I'm imagining an exchange like: person: "those aren't goats?" visual chatbot: "holy shit wait maybe those are goats!? I've never seen a goat in a tree before..." 🤣 (instead of what we actually see 👇) https://t.co/XlLJvzHdlA

But even then, it'd still be thinking purely in known categories. People are researching AI + uncertainty, but there's a difference between in-model uncertainty and uncertainty about which model to use. And choosing among a finite set of models is technically model uncertainty, but it's still a fixed set of known options, just one level up.

Bayesian updating requires a fixed ontology & even a fixed collection of hypotheses stated in that ontology, which means it's completely inadequate as an account of how reasoning/learning actually works, since any interesting problem (/life itself) starts without an adequate ontology! https://t.co/UhP1dCnsvC
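A minimal Python sketch of this point, using made-up hypotheses and numbers (nothing here comes from the visual chatbot in the post; `bayes_update`, the "tree" hypotheses and the likelihoods are all hypothetical). Bayes' rule over a fixed hypothesis set can only reshuffle probability among the options it started with, and normalization turns "nothing fits well" into a confident-looking answer anyway:

```python
def bayes_update(prior, likelihood):
    """Posterior over a fixed, finite hypothesis set, plus the evidence P(obs)."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    evidence = sum(unnormalized.values())
    posterior = {h: p / evidence for h, p in unnormalized.items()}
    return posterior, evidence


# Hypothetical ontology for "what's in the tree?" (illustrative numbers only).
prior = {"birds": 0.5, "leaves": 0.3, "fruit": 0.2}
# P(observation | h) for "four-legged, horned shapes on the branches":
# none of these hypotheses explains the observation well.
likelihood = {"birds": 0.02, "leaves": 0.01, "fruit": 0.01}

posterior, evidence = bayes_update(prior, likelihood)
print(posterior)  # "birds" ends up at about 0.67 despite the poor fit
print(evidence)   # the low evidence is the only trace of the mismatch
print("goats" in posterior)  # False: updating never adds a new hypothesis

# "Model uncertainty" over a finite set of models is the same move one level up:
# each model is just a fixed bundle of hypotheses.
models = {"wildlife": ["birds"], "vegetation": ["leaves", "fruit"]}
model_posterior = {m: sum(posterior[h] for h in hs) for m, hs in models.items()}
print(model_posterior)
```

Getting "goats" into the picture means someone has to write it into `prior` by hand; the update rule itself has no step for that.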

Relatedly: DeepMind winning at StarCraft is still waaaay closer to Go than to real-world strategy. SC has all known categories. Nobody can invent new units you've never seen, with not just different stats but abilities you can't imagine. The terrain can't be modified/destroyed.

There's an implicit model among many people, particularly computer scientists, that informal thinking is just a poor approximation of formal thinking, as opposed to being able to accomplish things that cannot be done formally, due to limits on certainty https://t.co/I5QQwq85TL

It seems to me that concerns (which I share) about Robust & Beneficial AI are oriented to the apparent impossibility of aligning something with the basic architecture of an LHem... given its native categorical tendency towards goodharting, & the complex nature of real-world value.

I think many people who are *not* concerned about AI Safety are unconcerned because they assume that anything intelligent enough to be scary will have the level of non-blindspot-ness & interconnectedness that a human does (with its right hemisphere).

But we need to differentiate 2 things: 1. It may be impossible to build AGI without it also having a more right-hemisphere architecture, and this *may* imply that AI safety for actual AGI won't be as impossible as the corrigibility problem currently seems. (Still very risky!)

2. It may *also* be that it is possible (& likely) to build a very powerful Narrow AI with more of the kind of left-hemisphere-esque architecture of current AI systems, which could totally fuck us up in ways @ESYudkowsky has warned about. (I seem to currently think both 1 & 2.)

This is obviously an oversimplification in many ways, but it at least starts to distinguish things so we can talk about them. That said, having serious conversations about it will require shared frameworks for discussing e.g. brain hemispheres (much more than this tweetstorm offers!)

If you're wanting to learn more about this new hemisphere model, this podcast is a pretty good place to start. It's just 44 minutes long and touches on a lot of the things I want to include in an intro write-up when I finally make one: https://t.co/LSjHNdaYct

If you'd rather read than listen, McGilchrist's book is also excellent. Detailed, well-structured, and well-sourced. Amazon link: https://t.co/ryXgz6AupI https://t.co/pTT9TSYg1c https://t.co/bxi01bowoU